- Implementation available at: https://github.com/AKartashoff/TPCDI-PDI

The advent of Big Data and the constant need for innovation and improved efficiency in the business world have given a new push to the research area of data warehousing. Recently, there have been several research works on Extract, Transform, Load (ETL) processes —the processes responsible for integrating heterogeneous data deriving from operational activities, cleansing and loading of the processed data to data warehouses— identifying their different perspectives (data-centric view, software view, business process view etc.) and proposing methodologies to facilitate automation in ETL design, implementation and testing. This research boost is further fueled by the promising paradigms of agile design and self-service BI, which additionally give rise to many quality concerns [1].

Nevertheless, obtaining ETL flows for performing experiments is rather challenging. Data processes of a company constitute part of its valuable assets and hence are naturally rare resources usually accompanied by legal restrictions. Even overseeing the confidentiality and regulatory obstacles, despite some notable research efforts, there is currently no de facto standard methodology for modeling and maintaining ETL processes. Thus, obtaining real ETL flows at a usable format for research purposes still remains a burden.

To this end, it is common practice in research to employ the use of test cases deriving from general-purpose benchmarks, such as the standards defined by the Transaction Processing Performance Council (TPC). Regarding ETL activities, the TPC has released TPC-DI - a benchmark for data integration that uses the domain of a retail brokerage firm and aspires to be representative of a broad spectrum of use cases, where data from multiple diverse sources need to be integrated in a concise Decision Support System. Thus, its operational system consists of an OLTP database, a HR system and a CRM system, whilst externally acquired financial and marketing data are also assumed to be available.

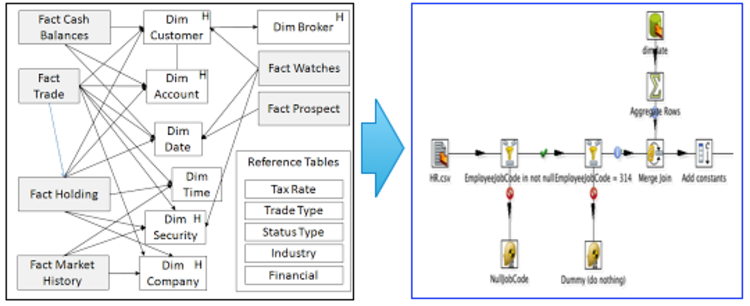

In this context, the TPC-DI specification describes the requirements for the data transformations that extract data from a staging area and after processing and cleansing, load them into a data warehouse (DW) of snowstorm schema with facts and dimensions. This description is intentionally conducted in a relaxed, semi-formal manner, in order not to tie the implementation of the benchmark to specific models and technologies, but instead to provide enough space to different vendors to use their custom technologies and implementations. However, the lack of a structured description of the processes (e.g., in a sql syntax) makes it a tedious task for researchers to use these processes as resources for test cases and examples.

In the TPCDI-PDI project, our goal was to overcome the shortcoming of the unformatted TPC-DI ETL descriptions and to generate a repository of ETL processes that comply with the TPC-DI specification and are developed using open-source technologies. Therefore, we implemented these ETL processes using Pentaho Data Integration (PDI a.k.a. Kettle) to model the workflows; and PostgreSQL for the databases of the staging area and the target DW. The source files for the ETL workflows, the database schemata and external data are publicly available as a GitHub project. In no case do we claim that this particular implementation is flawless or optimal in any sense; we tried to keep it as simple as possible with the only constrain of implementing the basic functionality as described in the TPC-DI specification. Nonetheless, we believe that this repository can act as a useful resource for researchers who require fast access to realistic, executable and easily modifiable ETL flows. In this respect, we would welcome any feedback/additions/modifications/extensions for this basis of processes and we hope to inspire similar initiatives that can foster research in this area.

[1] Theodorou, V., Abelló, A., Lehner, W., Thiele, M.. Quality measures for ETL processes: from goals to implementation. Concurrency and Computation Practice and Experience: Version of record online 2016;.