An introductory view into Prometheus

Prometheus is an Open Source software developed by SoundCloud, commonly used in production servers as a monitoring and alarming tool.

This article offers an introductory view into how it works and how to use it by exposing a running example.

After reading this article, you will understand what Prometheus is, how it works, and have some examples into the mind of its different components. You will also find several links along the article to further extend your knowledge.

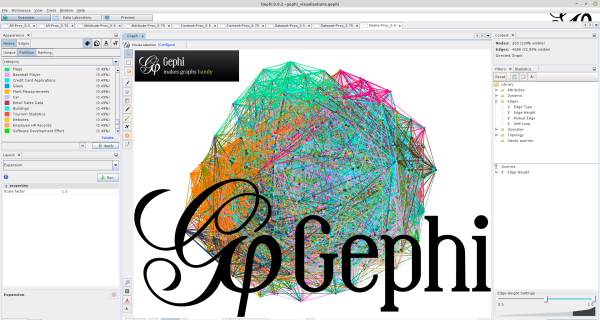

Visualising the data lake using proximity graphs in Gephi

Gephi is a free graph analysis and visualisation tool developed over the Netbeans platform for Java. It supports computing statistics over graphs, applying algorithms to analyse and to visualise the graphs, and to apply filters and queries over the graphs, all using an intuitive graphical-user interface. It has become very popular today due to its strong capabilities and support by the user community. We will demonstrate in this article how to use it to visualise a proximity graph for the data lake.

Tips and tricks on writing experiments

Presenting sound and convincing experimental results is a must in any paper. Besides providing practical evidence that your assumptions are correct, writing experiments is a great way to find bugs and make you rethink an algorithm that made sense in the blackboard. The objective of this blog post is to provide some tips and tricks on the process of writing experiments from my own personal experience. Naturally, any feedback is welcome so don't hesitate to drop an email if you have other interesting recommendations.

Automated Machine Learning and Its Tools

Most of us are well aware of how tedious, time-consuming and error inclined it could be to design a good machine learning pipeline for specific data or problem. In order to achieve such machine learning (ML) pipeline, a researcher has to perform extensive experiments at each stage.

The success of a designed machine learning pipeline highly relies on the selection of algorithms at each level. At each of these steps, the expert has to decide on the selection of the appropriate algorithm or set of algorithms along with their parameters (hyper-parameters) and parameters for the ML model. For a non-expert person, it is tough to make such selection which limits the use of ML models or results in poor predictive outcomes. The rapid growth of machine learning applications has created a demand for off-the-shelf machine learning methods that can be used easily and without expert knowledge.

The idea behind Auto-ML (automated machine learning) is to make the selection of best algorithms (suited for the given data) at each stage of ML pipelines easier and also independent of human input.

Excuse me! Do you have a minute to talk about API evolution?

If you work in an IT-related field, it is impossible not to have heard about APIs. Even if you may have not used them, you have benefited indirectly from them. We can simply describe APIs as a means of communication between software applications. To better explain how they work, we can make an analogy with the Apis (Genus of honey bees) role. One of the most important things Apis species do is plants’ pollination. By transporting pollen from one flower to another, they make possible plants reproduction. In API world, plants are software, pollens are request/response between software, and Apis are APIs.

Measuring mongoDB query performance

MongoDB has become one of the widely used NoSQL data stores now. The document-based storage provides high flexibility as well as complex query capabilities. Unlike in Relational Database Management Systems (RDBMS), there is no formal mechanism for the data design and query optimization for MongoDB. Hence, we have to rely on the information provided by the datastore and fine-tune the performance by ourselves. The query execution planner and the logger offers valuable information to achieve the goal of having optimal query performance in MongoDB.

Journal Response Time (JRT) - Nightmare of PhD students!

In the world of research or otherwise known as the world of "publish or perish", publishing a paper is of "life or death" importance. One of the most relevant measures of success in this world is the number of publications one has, and therefore the goal of every PhD student is to maximize it. Yet, given the time constraints a PhD student has, one has to perfectly plan the venues where he/she is going to publish. This is not a trivial problem. The problem aggravates when one has to publish a Journal article. Therefore, we developed a tool that facilitates students to find the right journal given their time constraints.

3 Big Data & Analytics Trends to watch out for in 2018

In the age of Machine Learning and AI, companies are racing towards better services and innovative solutions for better customer experiences. Businesses realize the need to take their big data insights further than they have before in order to serve, retain and win new customers. 2017 has been a big year for Big data analytics with lots of companies understanding the value of storing and analysis huge stream of data collected from different sources. Big data is in a constant mode of evolution. It is expected that the big-data market will be worth $46.34 billion by 2018.

Mining similarity between text-documents using Apache Lucene

One of the main challenges in Big Data environments is to find all similar documents which have common information.To handle the challenge of finding similar free-text documents, there is a need to apply a structured text-mining process to execute two tasks: 1. profile the documents to extract their descriptive metadata, 2. to compare the profiles of pairs of documents to detect their overall similarity. Both tasks can be handled by an open-source text-mining project like Apache Lucene.

Use of Vertical Partitioning Algorithms to Create Column Families in HBase

HBase is a NoSQL database. It stores data as a key-value pair where key is used to uniquely identify a row and each value is further divided into multiple column families. HBase reads subset of columns by reading the entire column families (which has referred columns) into memory and then discards unnecessary columns. To reduce number of column families read in a query, it is very important to define HBase schema carefully. If it is created without considering workload then it might create inefficient layout which can increase the query execution time. There are already many approaches available for HBase schema design but in this post, I will present to use of vertical partitioning algorithms to create HBase column families.

War between Data Formats

Big data introduces many challenges and one of them is how to physically store data for better access time. For this purpose, researchers have proposed many data formats which store data into different layouts to give optimal performance in different workloads. However, it is really challenging to decide which format is best for a particular workload. In this article, I am presenting latest research work on these formats. It covers research paper, benchmarking, and videos of the data formats.

GraphX- Pregel internals and tips for distributed graph processing

Apache Spark is becoming the most popular data analysis tool recently. And hence understanding its internals for better performant code writing is very important for a data scientist. GraphX is a specific Spark API for Graph processing on top of Spark. It has many improvements for graph specific processing. Along with some basic popular graph algorithms like PageRank and Connected Components, it also provides a Pregel API for developing any vertex-centric algorithm. Understanding the internals of the Pregel function and other GraphX APIs are important to write well-performing algorithms. Keeping that in mind, in this blog, we will elaborate the internals of Pregel as implemented in GraphX.

NUMA architectures in in-memory databases

NUMA is a memory access paradigm used to build processors with stronger computing power, where each CPU has its own memory but access to other CPU memories is also granted. The pay-off is that accessing local memory is faster than remote. As a consequence, in-memory database performance appears to be subject to the data placement.

Data Warehouses, Data Lakes and Data 'LakeHouses'

Data warehouses and data lakes are on the opposite ends of the spectrum of approaches of data stores for combining and integrating heterogeneus data sources, supporting the business analytical pipeline in organizations. These data stores are the cornerstone of the analytical framework. Both approaches have advantages and drawbacks, thus, is there room in this spectrum for approaches combining both strategies?

Enter the World of Semantics: using Jena to convert your data to RDF

The semantic web of data and the realm of Linked Open Data (LOD) is growing every day … and at its core is the Resource Description Framework (or RDF for short). RDF is a W3C recommended standard for representing data and metadata.

TPC-DI ETLs using PDI (a.k.a. Kettle)

TPCDI-PDI: overcoming the shortcoming of the unformatted TPC-DI ETL descriptions by generating a repository of ETL processes that comply with the TPC-DI specification and are developed using open-source technologies.

Students' welcome from the DTIM research bunker

Before the talk about research and blog posts about the work we do, it might be nice to share a piece of atmosphere in a few words about us. This post brings some insights from the student perspective.

Welcome to the DTIM blog

Welcome to the DTIM research group website! and specifically to the research group blog.